The Consequences of Trusting Computers

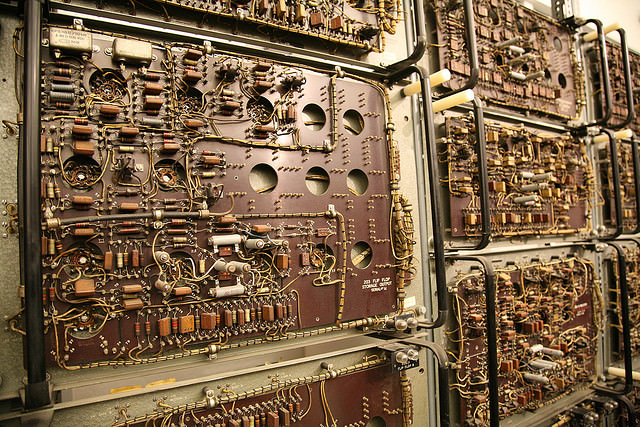

(Image by Laughing Squid)

Computers were created in large measure to solve problems, and the programs that run on them are designed to solve those problems. Those programs generally do exactly what we tell them to do. And much of what we tell them to do is straightforward, in the sense that the problems they solve follow the law of non-contradiction, i.e., an answer provided by a computer for a specific problem is either true or not true, but never both simultaneously.

I can program a computer to answer for me the question, “What is three factorial?” The answer provided, hopefully “six”, is either true or not true, but is quite obviously not both.
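Here’s roughly what that program might look like, as a minimal Python sketch. The function name `factorial` is just my choice for illustration (Python’s standard library also ships `math.factorial`). It either returns 6 for an input of 3 or it doesn’t; there’s no third option.

```python
def factorial(n: int) -> int:
    """Return n! (n factorial) for a non-negative integer n."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    result = 1
    # Multiply 2 * 3 * ... * n; for n = 0 or 1 the loop body never runs.
    for k in range(2, n + 1):
        result *= k
    return result

print(factorial(3))  # prints 6
```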

I’m ignoring some gray areas here, particularly where computers solve problems by learning, à la genetic algorithms in the case of Roger Alsing’s EvoLisa program or neural nets in the case of GNU Backgammon. But even in these arenas, computers are programmed to perform specific tasks that solve (or approximate) particular problems. For the rest of this post, I’m generally referring to the simpler class of problems, though I will touch on how decisions made within the financial sector over the last several years, based on solutions to incomplete mathematical models, helped cause our current global economic situation.

I really started thinking about this issue because of the now-famous Verizon Math site and its associated videos, which show just how hapless humans can be when we depend entirely on computers to return the correct answer. What I’m saying here is that we’ve more or less reached the point where we believe computers will always return the correct answer. We forget that while programmers aim to have their programs answer on the “true” side of the law of non-contradiction, sometimes they unfortunately don’t.

If you’d like a poignant example, please watch this video, in which several Verizon employees fail to recognize that their computer system has overcharged the customer on the phone. I don’t bring this video up to pick on Verizon specifically, but because it’s an issue that has gained a lot of attention over the last several months:

Now, here’s the point: Though Verizon is in the wrong, the employees are not willing to recognize the error. And why is this the case? I can think of several reasons.

- Verizon employees are used to hearing customers complain about how they have been mischarged, and generally speaking the customer is wrong.

- These Verizon employees do not understand the math being explained to them by the customer.

- These Verizon employees trust what their computer system tells them without question.

And I think all three issues contributed to the employees’ lack of understanding. But the issue that bothers me the most is the third: that the employees trust their computer system as infallible. Even in the face of blatant mathematical reasoning, the employees chose to side with the answer provided by the computer. And the computer was incorrect. Due to a variety of circumstances, the math performed by the computer program did not match the price quote Verizon had given. Rather than viewing the computer as the product of human intellect, they viewed it as the objective arbiter.
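To make this kind of mismatch concrete: the widely reported root of the Verizon Math dispute was a units mix-up, where a rate quoted as 0.002 cents per kilobyte was billed as 0.002 dollars per kilobyte. Below is a minimal Python sketch of how that plays out. The 10,000 KB usage figure is hypothetical (not the customer’s actual usage), and the function and variable names are mine, purely for illustration.

```python
from decimal import Decimal

RATE = Decimal("0.002")  # the quoted per-kilobyte rate, with no unit attached

def charge_in_dollars(kilobytes: int, rate_is_cents: bool) -> Decimal:
    """Return the charge in dollars, rounded to the cent.

    The same number (0.002) yields two very different bills depending
    on which unit the program assumes the rate is expressed in.
    """
    dollars_per_kb = RATE / 100 if rate_is_cents else RATE
    return (dollars_per_kb * kilobytes).quantize(Decimal("0.01"))

usage_kb = 10_000  # hypothetical usage figure
quoted = charge_in_dollars(usage_kb, rate_is_cents=True)   # 0.002 cents per KB
billed = charge_in_dollars(usage_kb, rate_is_cents=False)  # 0.002 dollars per KB
print(f"quoted: ${quoted}, billed: ${billed}")  # quoted: $0.20, billed: $20.00
```

Notice that both calls are arithmetically “correct”. The program multiplies flawlessly; it just multiplies in whatever unit it was told to use, which is exactly why the printed bill shouldn’t be treated as the final word.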

Using the computer as an objective arbiter is a dangerous business for a variety of reasons, most notably that the program returning the answer can be incomplete or incorrect. In the case of the recent financial meltdown, at least part of the blame can be placed on mathematical models that treated sets of risk transactions (e.g., credit default swaps) as independent events. As it turns out, these events were NOT independent. Here’s an article about this. But the program assumed they were independent. So was the computer wrong? Practically speaking, in retrospect, yes. But I don’t think that’s the right way of looking at it. The computer was answering the question based upon the programmer’s intent, and in that sense it was answering correctly.
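To see why the independence assumption matters so much, here’s a deliberately oversimplified Python sketch (not any real institution’s model) comparing two extremes for a hypothetical pool of assets: fully independent defaults versus perfectly correlated ones. The 5% default probability and pool size of five are made-up numbers for illustration.

```python
def joint_default_probability(p: float, n: int, independent: bool) -> float:
    """Probability that all n assets default, under two extreme assumptions.

    independent=True:  defaults are independent, so P(all default) = p ** n
    independent=False: defaults are perfectly correlated (all or nothing),
                       so P(all default) = p
    """
    return p ** n if independent else p

p = 0.05  # hypothetical per-asset default probability
n = 5     # hypothetical number of assets in the pool
print(joint_default_probability(p, n, independent=True))   # roughly 3.1e-07
print(joint_default_probability(p, n, independent=False))  # 0.05
```

Real models sit somewhere between these extremes, but the gap here (a factor of 160,000) shows how much the answer can hinge on a single assumption the program was given, and the program will report whichever number that assumption produces.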

What’s the moral of the story? Basically, it’s that computers answer problems in EXACTLY the ways they are programmed to. No more and no less. Computers are designed to be “right”, but that doesn’t mean it will always pan out that way. Treating them as flawless objective arbiters is farming out your intellect. And while I’m certainly not saying that computers and their programs can’t be trusted (hell, it’s what I do for a living), I am saying that it’s a good idea to treat them as what they are: a product of humanity.